Recent developments in Large Language Models (LLMs) have demonstrated their remarkable capabilities across a range of tasks. Questions, however, persist about the nature of LLMs and their potential to integrate common-sense human knowledge when performing tasks involving information about the real physical world.

This paper addresses these questions by exploring how LLMs can be extended to interact with and reason about the physical world through IoT sensors and actuators, a concept that we term Penetrative AI. We study this extension at two levels, according to how deeply LLMs penetrate into the physical world through the processing of sensory signals.

Our preliminary findings indicate that LLMs, with ChatGPT as the representative example in our exploration, show considerable and distinctive proficiency in applying the knowledge learned during training to interpret IoT sensor data and to reason about tasks in the physical realm.

Not only does this open up new applications for LLMs beyond traditional text-based tasks, but it also enables new ways of incorporating human knowledge into cyber-physical systems.

Large Language Models (LLMs) trained on extensive text datasets have shown remarkable capabilities across diverse tasks, including coding and logical problem-solving. These out-of-the-box capabilities indicate that LLMs already encode an enormous amount of common human knowledge (some studies refer to this as a world model of how the world works).

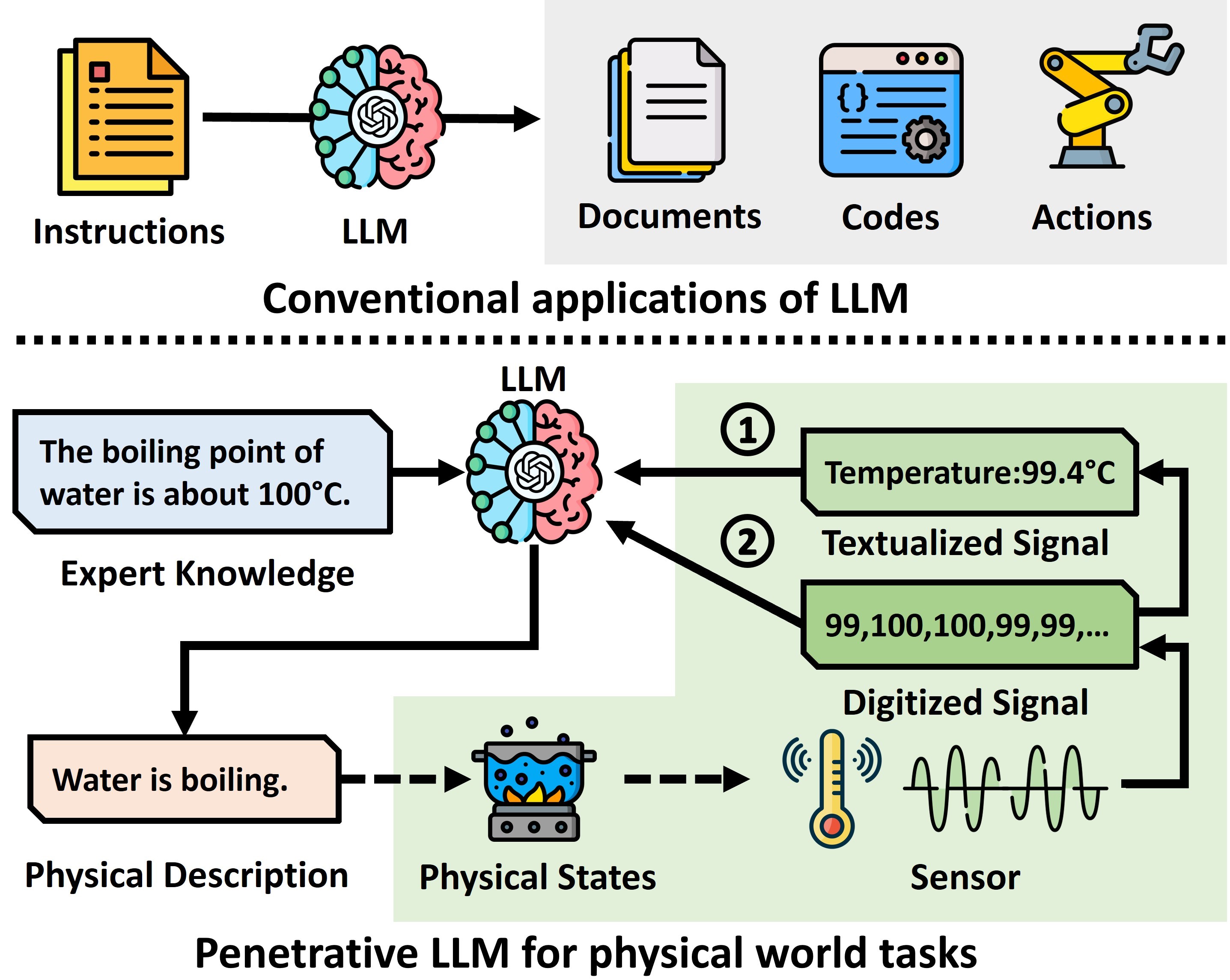

This paper is motivated by an essential and intriguing question: can we enable LLMs to complete tasks in the real physical world? We explore this question by extending the boundaries of LLM capabilities, letting LLMs interact directly with the physical world through Internet of Things (IoT) sensors. A basic example of this process is depicted in Figure 1: unlike the conventional use of LLMs in natural language tasks, the LLM is expected to analyze sensor data and, aided by expert knowledge, deduce the physical state of the object. These sensor and actuator readings are projections of the physical world, and the LLM is expected to draw on its common human knowledge to comprehend the sensor data and carry out perception tasks.

We formulate this problem from a signal processing point of view and explore the LLMs' penetration into the physical world at two signal processing levels of the sensor data:

Textualized-level penetration. LLMs are instructed to process textualized signals derived from the underlying sensor data.

Digitized-level penetration. LLMs are guided to process digitized signals, i.e., numerical sequences of raw sensor data.
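To make the distinction between the two levels concrete, the sketch below renders one hypothetical window of accelerometer magnitudes both ways. The digitized view is simply the numeric sequence serialized as text; the textualized view replaces it with a derived state. The function names, the variance rule, and its threshold are illustrative assumptions, not the encoding used in this work.

```python
import statistics

def digitized_view(samples, decimals=2):
    """Digitized level: the raw numeric sequence serialized as plain text."""
    return ", ".join(f"{x:.{decimals}f}" for x in samples)

def textualized_view(samples, still_threshold=0.05):
    """Textualized level: a coarse state derived from the same samples.

    The variance rule and its threshold are hypothetical, for illustration only.
    """
    if statistics.pvariance(samples) < still_threshold:
        state = "nearly constant (device likely stationary)"
    else:
        state = "fluctuating (device likely moving)"
    return f"Accelerometer magnitude is {state}."

# Hypothetical window of acceleration magnitudes in m/s^2.
window = [9.81, 9.79, 9.83, 9.80, 9.82]
print("Digitized:", digitized_view(window))
print("Textualized:", textualized_view(window))
```

The digitized view preserves every sample but leaves all interpretation to the LLM, whereas the textualized view moves part of the interpretation into preprocessing before the LLM sees the data.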

We term this endeavor "Penetrative AI": the world knowledge embedded in LLMs serves as a foundation that is integrated with Cyber-Physical Systems (CPS) to perceive and intervene in the physical world.

We illustrate our methodology with two applications, one at each level. Next, we describe the design and experimental results of each.

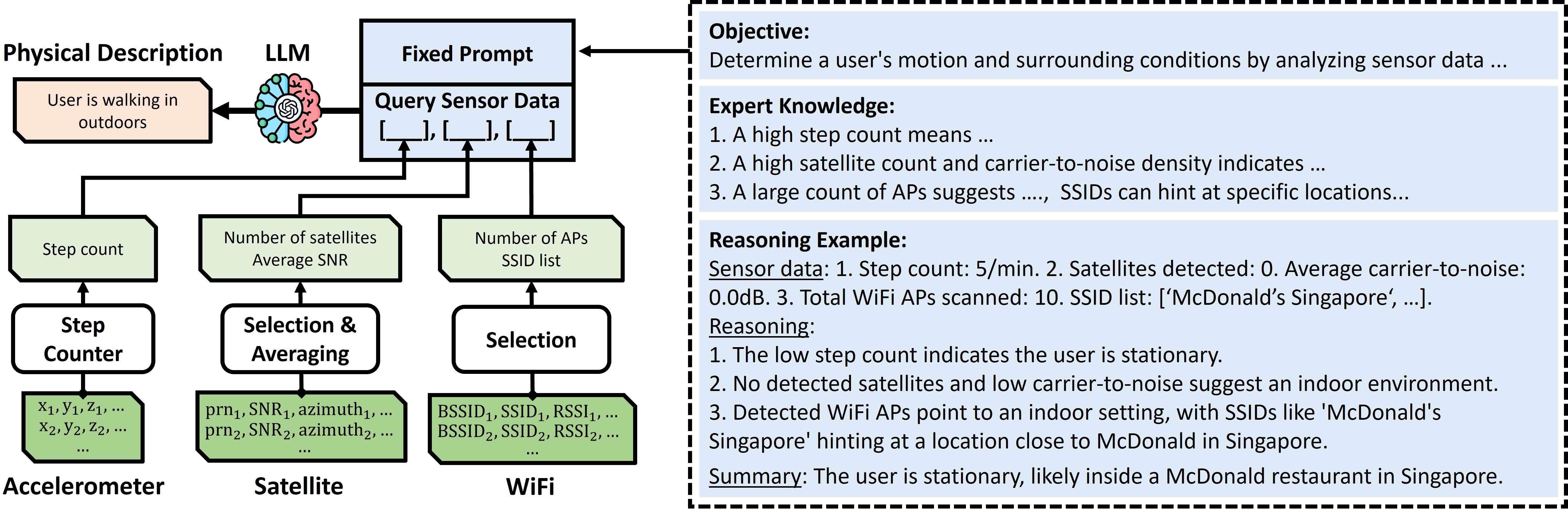

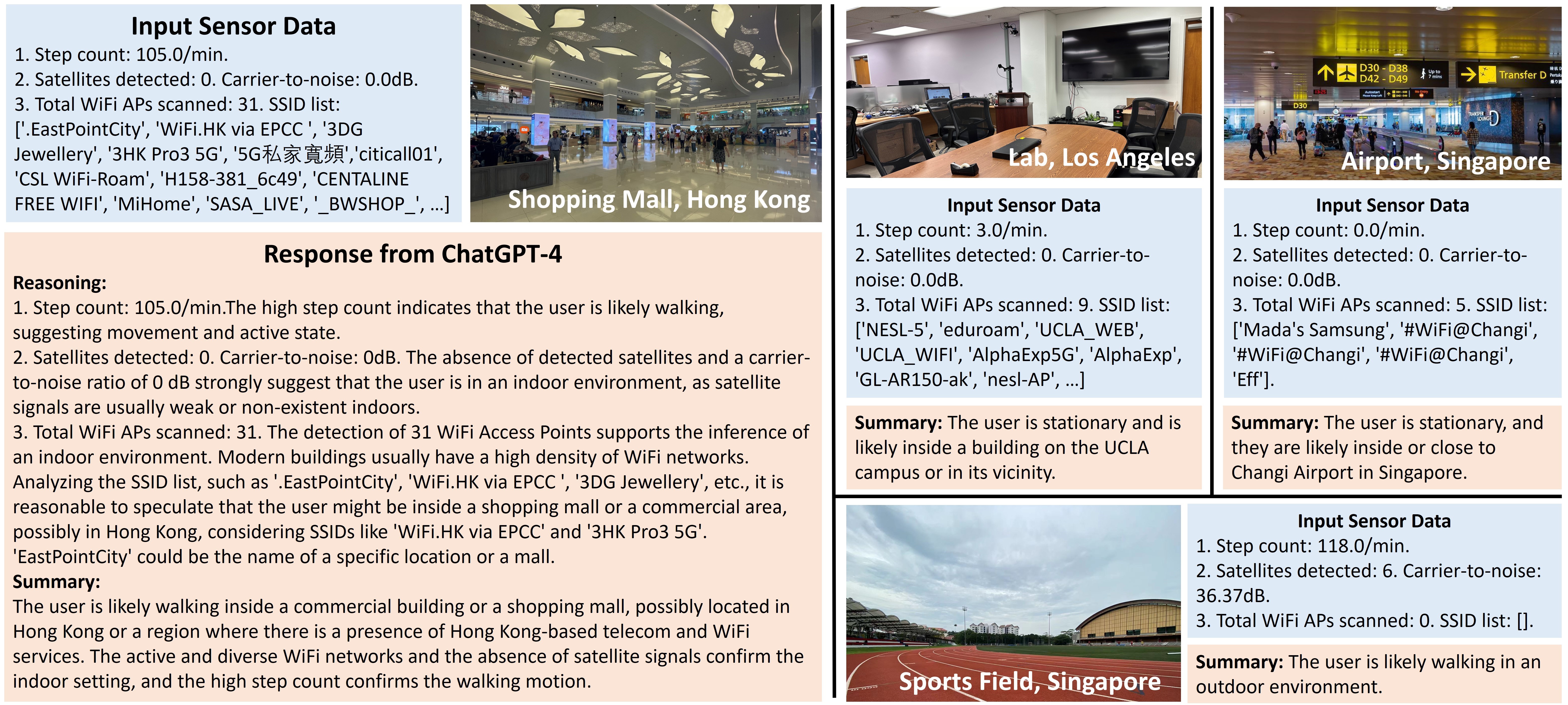

This section describes how we task ChatGPT, our chosen vehicle, with comprehending IoT sensor data at the textualized signal level.

We take activity sensing as an illustrative example: ChatGPT is tasked with interpreting sensor data collected from smartphones to infer user activities. The input sensor data comprise smartphone accelerometer, satellite, and WiFi signals, and the desired output is the user's motion and environmental context.

To help ChatGPT comprehend the sensor data, we first preprocess it: raw data from each sensing module are converted separately into textualized states that ChatGPT is expected to be able to interpret. A full prompt includes a defined objective and expert knowledge about the sensor data, all in natural language. In essence, the way we construct the prompt serves to educate ChatGPT and instruct it on how to interpret the sensor data.
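A minimal sketch of this preprocessing and prompt assembly follows, assuming each sensor is summarized by one scalar feature per time window. The `SensorWindow` fields, the thresholds (0.05, 8 satellites, 5 access points), and the expert-knowledge sentence are hypothetical placeholders chosen for illustration; they are not the rules or the prompt used in this work.

```python
from dataclasses import dataclass

@dataclass
class SensorWindow:
    accel_variance: float    # variance of acceleration magnitude, (m/s^2)^2
    satellites_in_view: int  # satellites with a usable signal
    wifi_aps: int            # number of WiFi access points detected

def textualize(w: SensorWindow) -> list[str]:
    """Convert each sensing module separately into a textual state.

    All thresholds here are hypothetical, for illustration only.
    """
    motion = "stationary" if w.accel_variance < 0.05 else "moving"
    sky = "many" if w.satellites_in_view >= 8 else "few"
    wifi = "many" if w.wifi_aps >= 5 else "few"
    return [
        f"Accelerometer: the device appears {motion}.",
        f"Satellite: {sky} satellites are in view ({w.satellites_in_view}).",
        f"WiFi: {wifi} access points are detected ({w.wifi_aps}).",
    ]

def build_prompt(objective: str, expert_knowledge: str, w: SensorWindow) -> str:
    """Assemble objective, expert knowledge, and textualized states into one prompt."""
    parts = [f"Objective: {objective}", f"Expert knowledge: {expert_knowledge}", "Sensor states:"]
    parts += [f"- {s}" for s in textualize(w)]
    return "\n".join(parts)

prompt = build_prompt(
    objective="Infer the user's motion and whether they are indoors or outdoors.",
    # Hypothetical expert-knowledge text, standing in for the real domain hints.
    expert_knowledge="Outdoors, a phone usually sees more satellites than it does indoors.",
    w=SensorWindow(accel_variance=0.01, satellites_in_view=2, wifi_aps=9),
)
print(prompt)
```

Keeping each sensor's conversion separate mirrors the per-module preprocessing described above and lets new modalities be added without touching the prompt template.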

Our preliminary results suggest that LLMs are highly effective at analyzing physical-world signals when those signals are properly abstracted into textual representations.


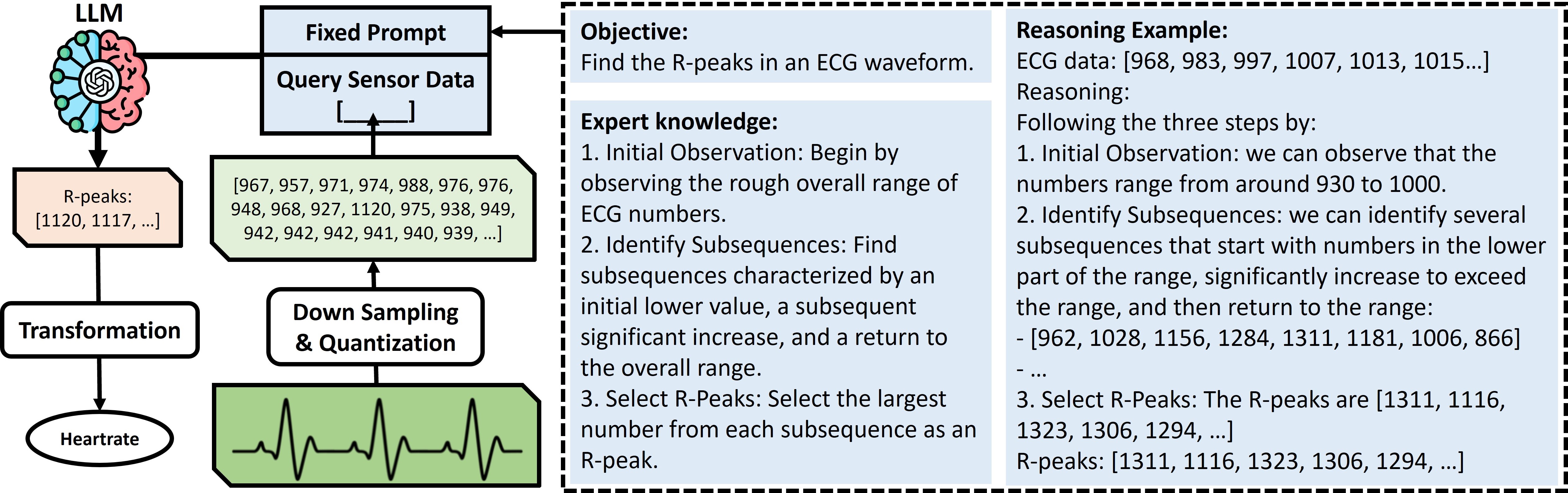

This section goes beyond the general expectation that LLMs can process textualized signals. Specifically, we study the potential of ChatGPT to comprehend digitized sensor signals.

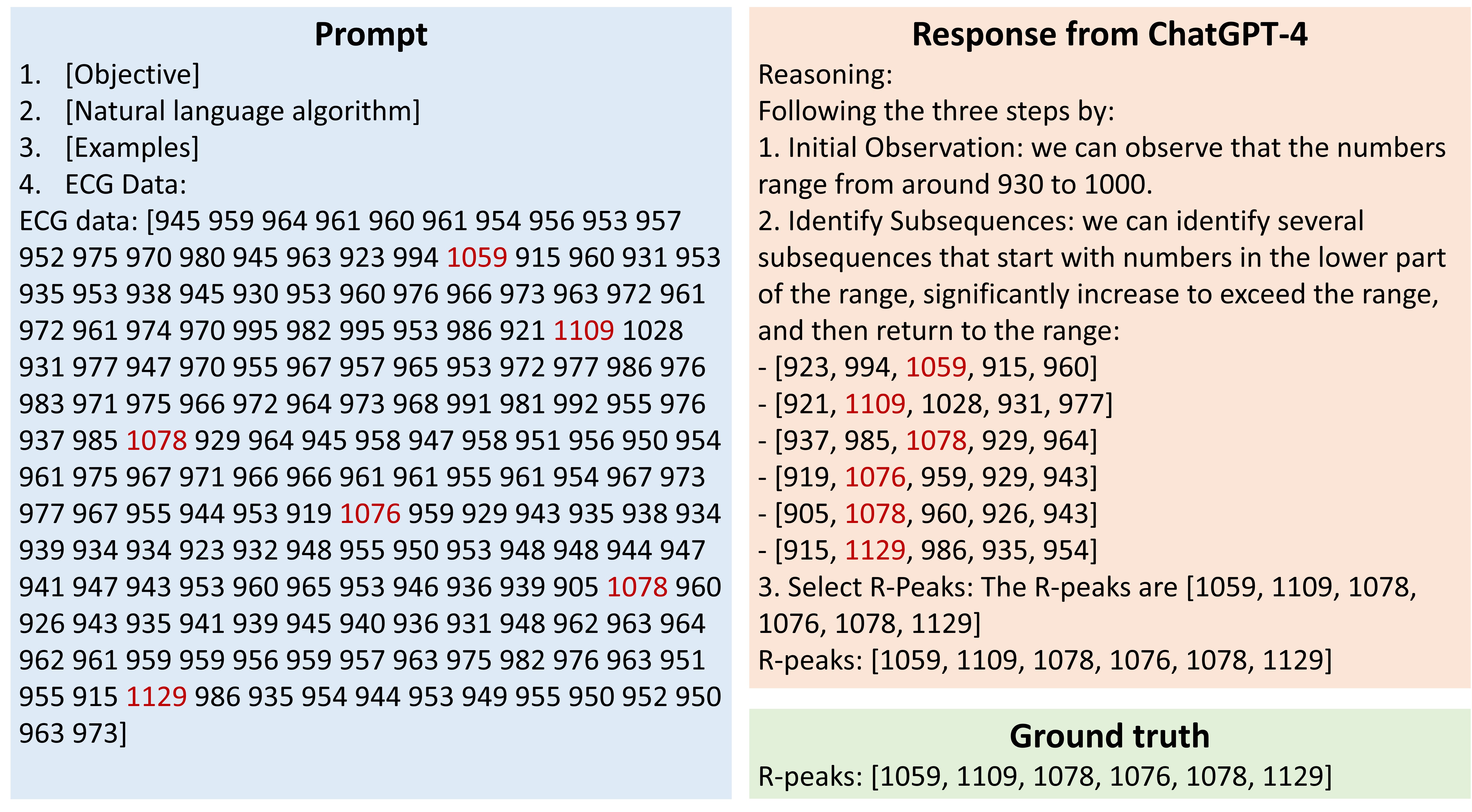

We take human heartbeat detection as an illustrative example: ChatGPT is given ECG waveforms as input and tasked with deriving the heart rate. Unlike the previous example, all sensor data in this application are expressed as sequences of digitized samples. Figure 4 provides an overview of the design.
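For reference, heart rate is conventionally computed from the spacing between successive R-peaks, the sharp, typically tallest spike of each heartbeat in an ECG. Assuming R-peaks at sample indices $n_1 < n_2 < \dots < n_K$ in a signal sampled at $f_s$ Hz (symbols introduced here for illustration; the exact post-processing in this work may differ), the RR intervals and the mean heart rate are:

$$
\mathrm{RR}_k = \frac{n_{k+1} - n_k}{f_s}\ \text{s}, \qquad
\mathrm{HR} = \frac{60}{\frac{1}{K-1}\sum_{k=1}^{K-1} \mathrm{RR}_k} = \frac{60\, f_s\, (K-1)}{n_K - n_1}\ \text{beats/min}.
$$

For example, four R-peaks spanning 150 samples at $f_s = 50$ Hz give $\mathrm{HR} = 60 \cdot 50 \cdot 3 / 150 = 60$ beats/min.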

Our prior experiments showed that ChatGPT failed to identify most R-peaks without guidance, even though R-peak selection appears to be an easy task for humans. So how do humans identify R-peaks? Based on manual observations, we designed a natural language "algorithm" that LLMs can understand to guide the selection of R-peaks:

Initial Observation. Begin by observing the rough overall range of ECG numbers in the provided data.

Identify Subsequences. Find subsequences of numbers that meet the following criteria:

Select R-Peaks. After identifying these subsequences, select the largest number from each subsequence as an R-peak.
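To make the contrast with this threshold-free instruction concrete, here is a conventional explicit-threshold counterpart of the three steps, followed by the heart-rate computation from the selected peaks. Because the criteria for step 2 are not reproduced here, the rule used (a maximal run of samples above a cutoff placed 60% of the way from the minimum to the maximum, at most 10 samples long) is an assumption, as are the function names and the synthetic signal. It is a hard-coded interpretation for comparison, not the prompt given to ChatGPT.

```python
def select_r_peaks(ecg, high_fraction=0.6, max_len=10):
    """Explicit-threshold version of the three steps (hypothetical criteria)."""
    # Step 1: observe the overall range of the data.
    lo, hi = min(ecg), max(ecg)
    cutoff = lo + high_fraction * (hi - lo)

    # Step 2: find maximal runs of consecutive samples above the cutoff.
    runs, start = [], None
    for i, x in enumerate(ecg):
        if x > cutoff and start is None:
            start = i
        elif x <= cutoff and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(ecg)))

    # Step 3: in each short-enough run, pick the index of the largest sample.
    return [max(range(s, e), key=lambda j: ecg[j]) for s, e in runs if e - s <= max_len]

def heart_rate_bpm(peaks, fs):
    """Mean heart rate in beats per minute from R-peak sample indices at fs Hz."""
    if len(peaks) < 2:
        raise ValueError("need at least two R-peaks")
    mean_rr_seconds = (peaks[-1] - peaks[0]) / (fs * (len(peaks) - 1))
    return 60.0 / mean_rr_seconds

# Synthetic ECG (hypothetical): flat baseline, QRS-like spikes one second apart,
# and a smaller T-wave-like bump after each spike that should not be selected.
fs = 50
ecg = [0.0] * 200
for p in (20, 70, 120, 170):
    ecg[p - 1], ecg[p], ecg[p + 1] = 0.5, 1.0, 0.5
    ecg[p + 10] = 0.3

peaks = select_r_peaks(ecg)
print(peaks, heart_rate_bpm(peaks, fs))
```

The fixed `high_fraction` and `max_len` values are exactly what the natural language version leaves unstated, which is why it relies on the LLM's own judgment of "significantly higher" values.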

We investigate whether ChatGPT can effectively execute such fuzzy logic, which specifies no explicit threshold values, when processing digitized signals. We call this a "natural language algorithm" because it is expressed entirely in text.

Our initial findings indicate that LLMs, particularly ChatGPT-4, exhibit remarkable proficiency in analyzing physical digitized signals when provided with proper guidance.

Although they do not achieve perfect accuracy, LLMs show surprisingly encouraging performance, even on purely digitized signals acquired from the physical world. This presents an enticing opportunity to use LLMs, and the world knowledge they embed, as foundation models that derive insights from sensory information with little or no additional task knowledge or data, i.e., in zero- or few-shot settings. Combined with IoT sensors and actuators, such a capability may help build intelligence into cyber-physical systems, a concept we term "Penetrative AI".

Penetrative AI explores the foundational role of LLMs in completing tasks in the physical world. Two primary characteristics define its scope: (i) the involvement of the world knowledge embedded in LLMs, or in variants such as Vision-Language Models (VLMs) that adapt to other input modalities, and (ii) integration with IoT sensors and/or actuators to perceive and intervene in the physical world.

@misc{xu2024penetrative,
  title={Penetrative AI: Making LLMs Comprehend the Physical World},
  author={Huatao Xu and Liying Han and Qirui Yang and Mo Li and Mani Srivastava},
  year={2024},
  eprint={2310.09605},
  archivePrefix={arXiv},
  primaryClass={cs.AI}
}